| | |
|---|---|
| Developer: | Huibert Aalbers (2) |
| Price: | Free |
| Ratings: | 0 |
| Reviews: | 0 |
| Lists: | 0 + 0 |
| Points: | 0 + 0 |
| Mac App Store | |

Description

LocalIntelligence is a powerful application that brings large language models directly to your desktop through Ollama. Chat with AI models privately and securely: everything runs locally on your device, with no cloud dependency or data sharing.

Core Features

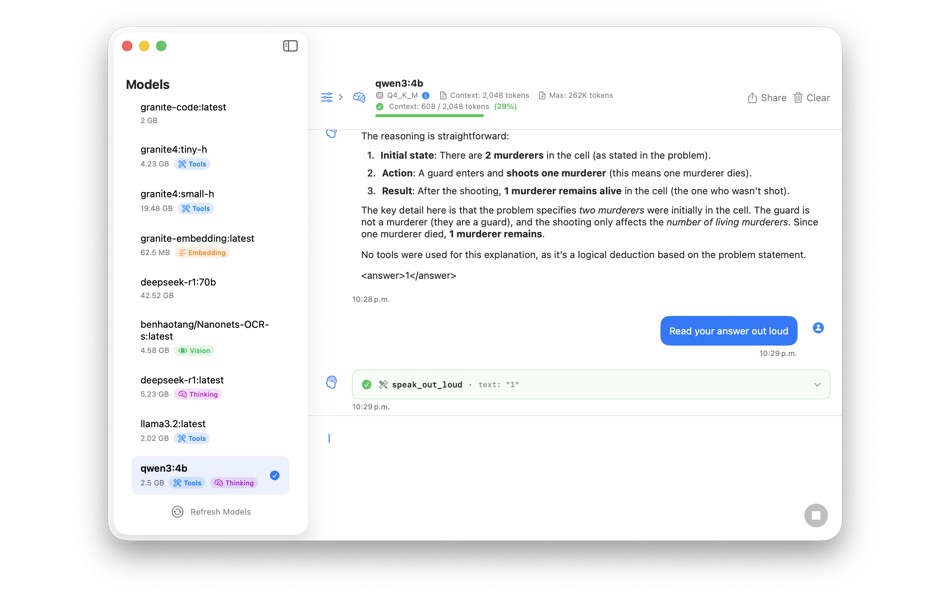

Ollama Integration

Connect seamlessly to your local Ollama instance. Browse and select from all your installed models, with automatic detection of model capabilities including vision, tools, thinking, and embedding support. Each model displays detailed runtime information including quantization level, context window size, and default generation parameters.
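The following sketch shows roughly how a client can list installed models. It reads Ollama's `GET /api/tags` endpoint on its default local address, `http://localhost:11434`, and keeps the fields a model picker might display. This is not LocalIntelligence's own code, and the helper names are hypothetical. The response fields it reads (`models`, `size`, `details.family`, `details.parameter_size`, `details.quantization_level`) follow Ollama's published API.

```python
import json
import urllib.request

# Ollama's default local endpoint (assumption: not changed via OLLAMA_HOST).
OLLAMA_URL = "http://localhost:11434"


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    """Call GET /api/tags, which lists the locally installed models."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return json.load(resp)


def summarize_models(tags: dict) -> list:
    """Reduce an /api/tags response to the fields a model picker might show.

    Hypothetical helper; fields missing from the response become None.
    """
    summaries = []
    for model in tags.get("models", []):
        details = model.get("details", {})
        summaries.append({
            "name": model["name"],
            "family": details.get("family"),
            "parameters": details.get("parameter_size"),
            "quantization": details.get("quantization_level"),
            # /api/tags reports size in bytes; convert to decimal gigabytes.
            "size_gb": round(model.get("size", 0) / 1e9, 2),
        })
    return summaries
```

When Ollama is running, `summarize_models(fetch_tags())` returns one entry per installed model. Keeping parsing separate from the network call means the parsing can be checked against a saved response.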

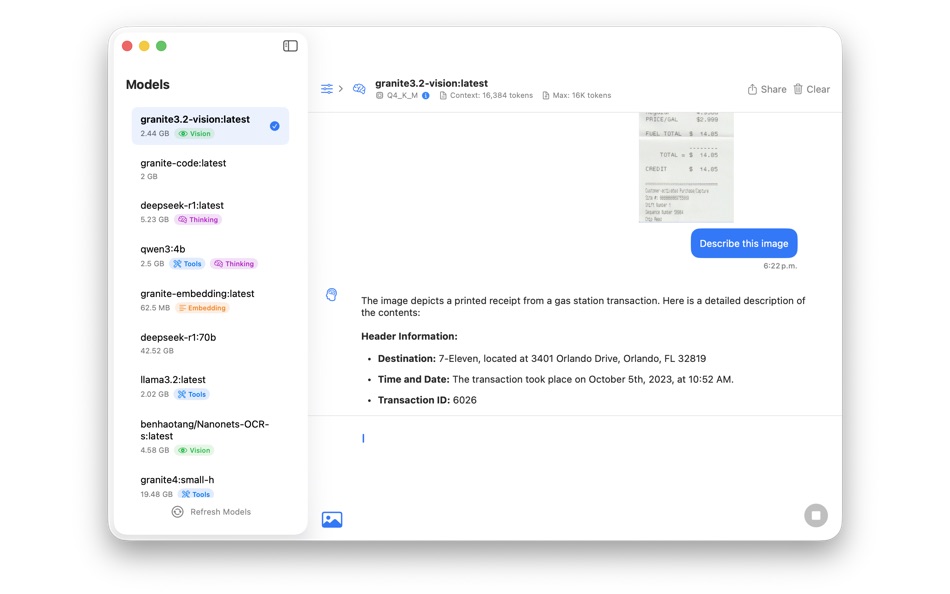

Vision Support

Vision-capable models can analyze images. Simply drag and drop an image onto the photo icon or use the file picker to attach photos, screenshots, or graphics. Ask questions about what you see, extract text, analyze diagrams, or get creative descriptions—all processed locally on your device.
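Ollama's `/api/chat` endpoint accepts images as base64-encoded strings in a message's `images` list. The sketch below builds such a request body. The helper names are hypothetical, and the model name `llava` is only an example of a vision-capable model.

```python
import base64
import json


def image_message(prompt: str, image_bytes: bytes) -> dict:
    """Build a user message for Ollama's /api/chat that carries one image.

    Ollama expects images as base64-encoded strings in the "images" list.
    """
    return {
        "role": "user",
        "content": prompt,
        "images": [base64.b64encode(image_bytes).decode("ascii")],
    }


def chat_payload(model: str, messages: list) -> bytes:
    """Serialize a non-streaming /api/chat request body as UTF-8 JSON."""
    return json.dumps({"model": model, "messages": messages, "stream": False}).encode("utf-8")
```

For example, `chat_payload("llava", [image_message("What text appears here?", data)])`, where `data` holds the bytes of a screenshot, produces a body that can be POSTed to `http://localhost:11434/api/chat`.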

MCP (Model Context Protocol) Integration

Extend your AI's capabilities with MCP tools. Connect to TCP/IP MCP servers. Tools are automatically discovered and made available to compatible models, enabling your AI to fetch real-time data, perform calculations, access APIs, and interact with external services. The app handles tool execution, result processing, and multi-turn conversations seamlessly.
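MCP is built on JSON-RPC 2.0. After the `initialize` handshake (not shown), a client discovers tools with a `tools/list` request. Each tool comes back with a `name`, a `description` and an `inputSchema` (a JSON Schema). Ollama's `/api/chat` instead takes a `tools` array in a function-calling format. The sketch below shows one plausible mapping between the two. It leaves out the transport (TCP or HTTP/SSE), and it is an illustration under those assumptions, not the app's implementation.

```python
import json
from typing import Optional


def jsonrpc_request(method: str, req_id: int, params: Optional[dict] = None) -> str:
    """Encode a JSON-RPC 2.0 request, the message format MCP is built on."""
    message = {"jsonrpc": "2.0", "id": req_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


def mcp_tools_to_ollama(list_result: dict) -> list:
    """Map an MCP tools/list result onto the "tools" array of Ollama's /api/chat."""
    return [
        {
            "type": "function",
            "function": {
                "name": tool["name"],
                "description": tool.get("description", ""),
                # MCP describes arguments with a JSON Schema under "inputSchema".
                "parameters": tool.get("inputSchema", {"type": "object", "properties": {}}),
            },
        }
        for tool in list_result.get("tools", [])
    ]
```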

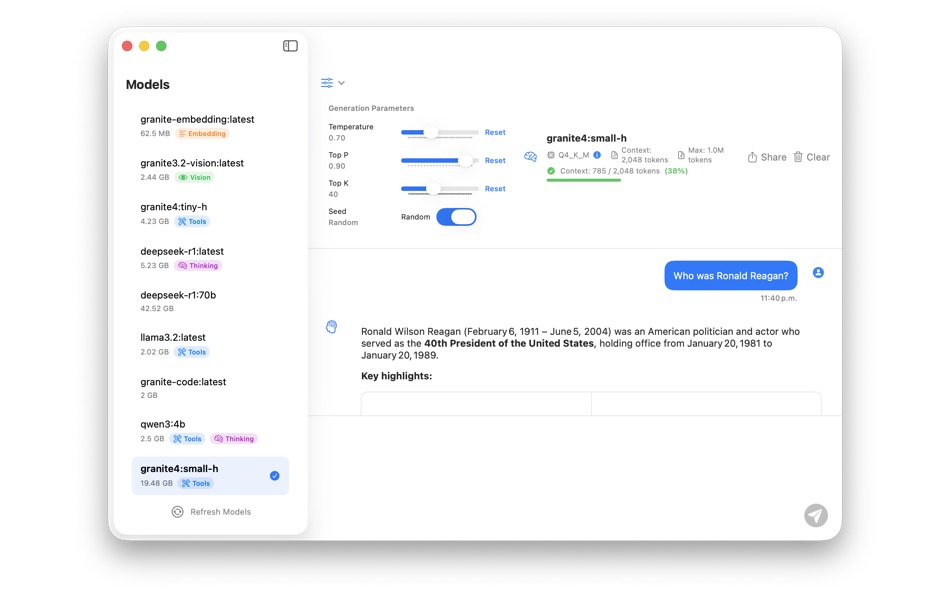

Fine-Tuned Control

Adjust generation parameters on the fly with an intuitive interface:

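• Temperature: Control randomness and creativity (0.0 to 2.0)

• Top P: Nucleus sampling; restricts each choice to the smallest set of tokens whose cumulative probability reaches P

• Top K: Limit each choice to the K most likely tokens for more consistent output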

• Seed: Set fixed seeds for reproducible outputs
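In Ollama, these settings travel in the `options` object of a chat or generate request, under the keys `temperature`, `top_p`, `top_k` and `seed`. The sketch below builds that object and includes only the values the user actually set, so the model's own defaults apply to everything else. The 0.0 to 2.0 temperature range comes from the description above. The bounds on `top_p` and `top_k` are assumptions, and the helper is hypothetical.

```python
from typing import Optional


def generation_options(
    temperature: Optional[float] = None,
    top_p: Optional[float] = None,
    top_k: Optional[int] = None,
    seed: Optional[int] = None,
) -> dict:
    """Build an Ollama "options" object, omitting unset values so model defaults apply."""
    options = {}
    if temperature is not None:
        # Range taken from the slider described above (0.0 to 2.0).
        if not 0.0 <= temperature <= 2.0:
            raise ValueError("temperature must be between 0.0 and 2.0")
        options["temperature"] = temperature
    if top_p is not None:
        # Assumed bound: a cumulative probability threshold in (0, 1].
        if not 0.0 < top_p <= 1.0:
            raise ValueError("top_p must be in (0, 1]")
        options["top_p"] = top_p
    if top_k is not None:
        # Assumed bound: at least one candidate token must remain.
        if top_k < 1:
            raise ValueError("top_k must be at least 1")
        options["top_k"] = top_k
    if seed is not None:
        # A fixed seed usually makes output repeatable for the same model and prompt.
        options["seed"] = seed
    return options
```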

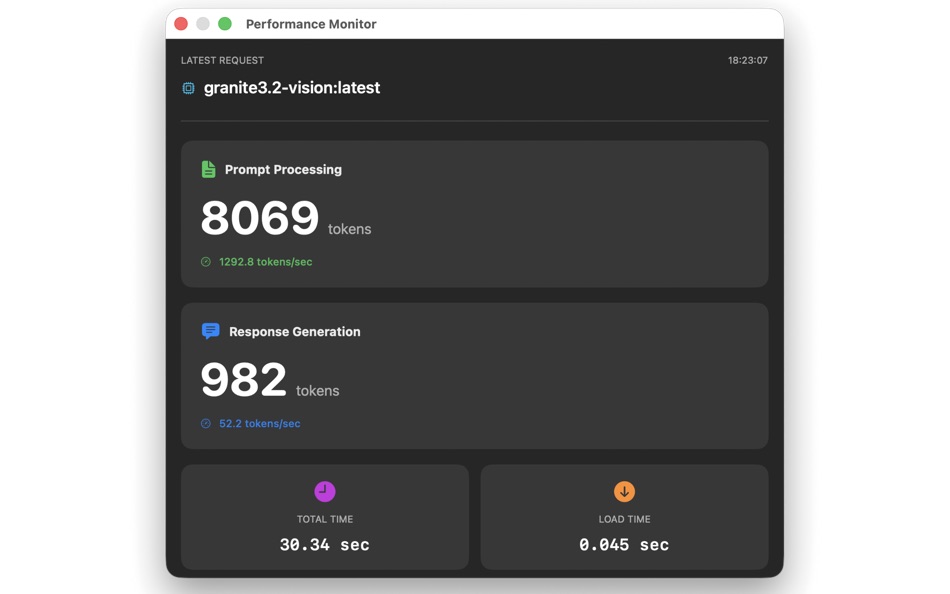

Performance Monitoring

Track model performance with detailed metrics (optional verbose mode):

• Prompt evaluation speed (tokens/second)

• Generation speed (tokens/second)

• Context window usage with visual indicator

• Load times and total duration
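A completed Ollama response (non-streaming, or the final streamed chunk) includes counters that these metrics can be derived from. They are `prompt_eval_count`, `prompt_eval_duration`, `eval_count`, `eval_duration`, `load_duration` and `total_duration`, with all durations in nanoseconds. The sketch below converts them to tokens per second and seconds. The exact formulas LocalIntelligence uses are not documented, so treat this as one plausible version.

```python
NS_PER_S = 1_000_000_000  # Ollama reports durations in nanoseconds


def _tokens_per_second(count: int, duration_ns: int) -> float:
    """Tokens per second; 0.0 when no duration was reported."""
    return count * NS_PER_S / duration_ns if duration_ns else 0.0


def performance_metrics(final: dict) -> dict:
    """Summarize the timing fields of a completed Ollama /api/chat response."""
    return {
        "prompt_tokens_per_s": _tokens_per_second(
            final.get("prompt_eval_count", 0), final.get("prompt_eval_duration", 0)
        ),
        "generation_tokens_per_s": _tokens_per_second(
            final.get("eval_count", 0), final.get("eval_duration", 0)
        ),
        "load_s": final.get("load_duration", 0) / NS_PER_S,
        "total_s": final.get("total_duration", 0) / NS_PER_S,
    }
```

For example, a response that generated 100 tokens in 2,000,000,000 ns works out to 50 tokens per second.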

Conversation Management

• Export conversations in plain text or Markdown format

• Copy to clipboard or save as files

• Clear conversations with confirmation dialogs

• Context window tracking prevents truncation issues

• Model switching automatically clears conversations to prevent confusion
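The sketch below shows one way to export a conversation stored as Ollama-style `{"role", "content"}` messages to Markdown or plain text, and to save it as a UTF-8 file. The layout (a title heading and bold role labels) is an assumption for illustration, not LocalIntelligence's actual export format.

```python
from pathlib import Path


def to_markdown(messages: list, title: str = "Conversation") -> str:
    """Render messages as Markdown: a title heading, then one bold-labelled paragraph each."""
    lines = [f"# {title}", ""]
    for message in messages:
        lines.append(f"**{message['role'].capitalize()}:** {message['content']}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


def to_plain_text(messages: list) -> str:
    """Render messages as plain text, one "Role: content" block per message."""
    return "\n\n".join(f"{m['role'].capitalize()}: {m['content']}" for m in messages) + "\n"


def save_export(text: str, path: str) -> None:
    """Write an exported conversation to disk as UTF-8."""
    Path(path).write_text(text, encoding="utf-8")
```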

Modern, Native Experience

• Clean, intuitive interface

• Dark mode support

• Keyboard shortcuts and native controls

• Smooth animations and transitions

• Efficient memory usage

Privacy & Security

• 100% local processing - no data leaves your Mac

• No telemetry or tracking

• Your conversations stay private

Advanced Features

Model Information

View comprehensive details about each model:

• Quantization type with explanatory guide (Q4_K_M, Q8_0, F16, etc.)

• Current and maximum context window sizes

• Parameter family and format

• Size and modification date
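Much of this information is available from Ollama's `POST /api/show` endpoint. In recent versions the response has a `details` object (format, family, quantization level) and a `model_info` map whose keys carry an architecture prefix, such as `llama.context_length`. Newer versions also return a `capabilities` list. The sketch below reads those fields. The key names can vary between Ollama versions, and the helpers are hypothetical.

```python
import json
import urllib.request


def fetch_show(model: str, base_url: str = "http://localhost:11434") -> dict:
    """POST /api/show for one model (requires a running Ollama instance)."""
    request = urllib.request.Request(
        f"{base_url}/api/show",
        data=json.dumps({"model": model}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=5) as resp:
        return json.load(resp)


def model_info_summary(show: dict) -> dict:
    """Pick format, family, quantization, max context and capabilities from /api/show."""
    details = show.get("details", {})
    info = show.get("model_info", {})
    arch = info.get("general.architecture")
    return {
        "format": details.get("format"),
        "family": details.get("family"),
        "quantization": details.get("quantization_level"),
        # The maximum context length is stored under an architecture-prefixed key.
        "max_context": info.get(f"{arch}.context_length") if arch else None,
        # Only newer Ollama versions report capabilities; default to an empty list.
        "capabilities": show.get("capabilities", []),
    }
```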

Context Window Management

Visual indicator shows real-time context usage:

• Automatic warnings when limits are reached
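A simple usage indicator can estimate how full the window is. It adds the last response's `prompt_eval_count` and `eval_count` and divides by the configured `num_ctx`. The sketch below does that and flags a warning at a 90% threshold. Both the estimate and the threshold are assumptions: the app's actual method is not documented, and the result is only approximate.

```python
def context_usage(prompt_tokens: int, generated_tokens: int, num_ctx: int, warn_at: float = 0.9) -> tuple:
    """Return (fraction of the context window used, whether to show a warning)."""
    if num_ctx <= 0:
        raise ValueError("num_ctx must be positive")
    used = (prompt_tokens + generated_tokens) / num_ctx
    return used, used >= warn_at
```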

MCP Server Management

Configure and test MCP connections:

• Connect to remote HTTP/SSE servers

• Real-time connection testing with detailed diagnostics

• Visual status indicators (connected, failed, testing)

• Automatic tool discovery and listing

Tool Execution

When models with tool support call functions:

• Visual indication of executing tools

• Display of tool results inline

• Multi-turn conversations with context preservation
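When a tools-capable model wants to call a tool, Ollama's chat response carries `message.tool_calls`. Each call has a `function.name` and `function.arguments` (a JSON object). The client runs each tool and appends a `tool`-role message with the result. It then calls `/api/chat` again with the full history so the model can continue. The sketch below covers the execution step. A plain dict of Python callables stands in for MCP tools, which in a real client would be forwarded as `tools/call` requests. The `tool_name` field on tool messages follows recent Ollama documentation and may not be recognized by older versions.

```python
import json
from typing import Callable, Dict


def run_tool_calls(assistant_msg: dict, tools: Dict[str, Callable[..., object]]) -> list:
    """Execute each tool call in an assistant message and return tool-role messages."""
    results = []
    for call in assistant_msg.get("tool_calls", []):
        function = call["function"]
        name = function["name"]
        args = function.get("arguments", {})
        if isinstance(args, str):
            # Defensive: tolerate arguments encoded as a JSON string.
            args = json.loads(args)
        if name in tools:
            content = str(tools[name](**args))
        else:
            content = f"error: unknown tool {name!r}"
        results.append({"role": "tool", "tool_name": name, "content": content})
    return results
```

A client would append these messages to the history after the assistant message and send the history back to the model.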

System Requirements

• Ollama installed and running locally

• Recommended: Apple Silicon for optimal performance

Perfect For

• Developers testing and debugging models

• Researchers exploring AI capabilities

• Privacy-conscious users who want local AI

• Anyone who wants full control over their AI interactions

LocalIntelligence puts powerful AI tools in your hands while respecting your privacy and giving you complete control over the experience.

What's New

- Version: 1.2

- Updated:

- Added a slider to the General pane of the Settings window that sets a default context window size for all models. Previously, this value could only be set per model.
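In Ollama, the context window size is requested through the `num_ctx` option. The sketch below shows one hypothetical way a global default and per-model values could combine into that option. It assumes a per-model value, when present, takes precedence; the release note does not say how the two interact.

```python
from typing import Dict, Optional


def resolve_num_ctx(model: str, global_default: int, per_model: Dict[str, int]) -> int:
    """Hypothetical precedence: a per-model value wins, otherwise the global default."""
    return per_model.get(model, global_default)


def options_with_context(model: str, global_default: int, per_model: Dict[str, int],
                         options: Optional[dict] = None) -> dict:
    """Copy the request options and set Ollama's num_ctx from the resolved size."""
    merged = dict(options or {})
    merged["num_ctx"] = resolve_num_ctx(model, global_default, per_model)
    return merged
```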

Price

- Today: Free

- Lowest: Free

- Highest: Free

Developer

- Huibert Aalbers

- Platforms: macOS Apps (2)

- Lists: 0 + 0

- Points: 1 + 0

- Ratings: 0

- Reviews: 0

- Discounts: 0

- Videos: 0

Additional Information

- Version: 1.2

- Category: macOS Apps › Developer Tools

- Operating systems: macOS 15.5 or later

- Size: 2 MB

- Supported languages: English

- Age rating: 4+

- Mac App Store rating: 0

- Updated:

- Release date:

Contacts

- Mac App Store

You May Also Like

- Local Strings

- macOS Apps: Developer Tools, by Tomoyuki Okawa

- Free

- Lists: 0 + 1, Ratings: 0, Reviews: 0

- Points: 0 + 0, Version: 1.0.3. * See if Localizable.strings File Contains All Localized Strings Specified by a View Controllers * Developing macOS applications and iOS apps, I'm pretty sure that there are occasions ...

- Get Local

- macOS Apps: Developer Tools, by Mark Battistella

- Free

- Lists: 0 + 1, Ratings: 0, Reviews: 0

- Points: 0 + 0, Version: 1.3.1. Never leave your user base without localised text. Export your localisations from Xcode and import them into Get Local. Select the strings you want to translate, choose from over 100 ...

- UTC and Local Time

- macOS Apps: Developer Tools, by Midwest Computer Solutions

- Free

- Lists: 0 + 0, Ratings: 0, Reviews: 0

- Points: 1 + 0, Version: 2.0. Simple status bar app that shows UTC time and your local time based on your settings in system preferences.

- Jeeves - local HTTP server

- macOS Apps: Developer Tools, by Alexander Zats

- Free

- Lists: 1 + 0, Ratings: 0, Reviews: 0

- Points: 1 + 0, Version: 1.1. Extremely flexible, efficient and simple local server. Did you ever get stuck on the train or in the plain with no connectivity and burning desire to work? I know I did! When I want to

- New

- Stacker: Local Dev Environment

- macOS Apps: Developer Tools, by Yasin Kuyu

- Free

- Lists: 0 + 0, Ratings: 0, Reviews: 0

- Points: 0 + 0, Version: 1.1.1. Stacker is the modern, standalone local development environment built for professional PHP and Web Developers. Say goodbye to slow VMs and complex Docker setups. Stacker runs native ...

- InboxFox - Local Email Tester

- macOS Apps: Developer Tools, by Andrew Miller

- * Free

- Lists: 0 + 0, Ratings: 0, Reviews: 0

- Points: 1 + 0, Version: 1.0.1. InboxFox is a native macOS application designed for developers who need to test and debug email functionality in their applications. Instead of sending test emails to real addresses or

- PandaChef

- macOS Apps: Developer Tools, by Panda Intelligence Software Ltd

- Free

- Lists: 0 + 0, Ratings: 0, Reviews: 0

- Points: 0 + 0, Version: 1.0.4. PandaChef is a powerful data processing and encryption toolkit designed for developers and data analysts. It provides a wide range of cryptographic algorithms, data conversion, and ...

- Xcode

- macOS Apps: Developer Tools, by Apple

- Free

- Lists: 22 + 8, Ratings: 5 (1), Reviews: 0

- Points: 11 + 0, Version: 26.2. Xcode offers the tools you need to develop, test, and distribute apps for Apple platforms, including predictive code completion, generative intelligence powered by the best coding ...

- WebSSH - Sysadmin Toolbox

- macOS Apps: Developer Tools, by MENGUS ARNAUD

- * Free

- Lists: 3 + 2, Ratings: 0, Reviews: 0

- Points: 10 + 2,356 (4.7), Version: 30.9. Whether you're on the go or at your desk, WebSSH keeps you connected anytime, anywhere! ٩(^ ^)۶ WebSSH is a powerful and user-friendly SSH, SFTP, Telnet, and Port Forwarding client for

- Transmit 5

- macOS Apps: Developer Tools, by Panic, Inc.

- * Free

- Lists: 6 + 2, Ratings: 0, Reviews: 0

- Points: 6 + 0, Version: 5.11.3. The gold standard of macOS file transfer apps just drove into the future. Transmit 5 is here. Upload, download, and manage files on tons of servers with an easy, familiar, and powerful

- Monitor and Manage Search Ads

- macOS Apps: Developer Tools, by Remote Sunrise LTD

- Free

- Lists: 2 + 3, Ratings: 0, Reviews: 0

- Points: 14 + 2 (5.0), Version: 2026.2. # SUPERCHARGE YOUR APPLE SEARCH ADS WORKFLOW SearchAds Manager gives performance marketers a fast, native, and secure workspace to manage every campaign, ad group, and keyword across ...

- C Notebook

- macOS Apps: Developer Tools, by 铖 邢

- * $0.99

- Lists: 2 + 2, Ratings: 0, Reviews: 0

- Points: 12 + 1 (5.0), Version: 3.7.0. This is a notebook that integrates code and note functions. At present, the notebook supports C language writing and local debugging and running, and a series of excellent debugging ...

- mBoard

- macOS Apps: Developer Tools, by Mew Sang Lim

- Free

- Lists: 1 + 1, Ratings: 0, Reviews: 0

- Points: 16 + 0, Version: 6.0.17. # Understanding KPI Dashboard Design for IoT and Digital Twins * mBoard is a low-code development platform that provides a development environment for creating application software, ...

- CodeRunner 4

- macOS Apps: Developer Tools, by Nikolai Krill

- $22.99

- Lists: 2 + 3, Ratings: 0, Reviews: 0

- Points: 9 + 0, Version: 4.5. Whether you're new to coding or an experienced developer, CodeRunner is the perfect tool to write, run, and debug code quickly in any programming language. Enjoy essential IDE features